How to compile SASS/SCSS files when deploying a Django app to Heroku

September 4, 2018

I'm using django-sass-processor to automatically compile the SASS files in a Django 2.1 project. Everything runs fine locally, but on Heroku the pages appear unstyled. The problem is that Heroku only runs `./manage.py collectstatic` when building a Django app, which simply copies the static files to the `./staticfiles` folder. As the SCSS files haven't been compiled yet, only the `*.scss` files end up in that folder.
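To confirm that this is what happened, you can look for `.scss` files in the collected folder that have no compiled counterpart. The helper below is a hypothetical sketch, not part of django-sass-processor. It assumes each compiled file sits next to its source with the same name and a `.css` extension, and it skips Sass partials (names starting with `_`), which are only imported by other files and never compiled on their own.

```python
from pathlib import Path


def uncompiled_stylesheets(static_root):
    """Return .scss files under static_root that have no sibling .css file.

    Assumes compiled output keeps the source name with a .css extension
    and skips Sass partials (names starting with "_").
    """
    missing = []
    for scss in sorted(Path(static_root).rglob("*.scss")):
        if scss.name.startswith("_"):
            continue  # partials are only imported, never compiled alone
        if not scss.with_suffix(".css").exists():
            missing.append(scss)
    return missing


if __name__ == "__main__":
    # "staticfiles" is the folder collectstatic fills in this setup
    for path in uncompiled_stylesheets("staticfiles"):
        print("not compiled:", path)
```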

The solution is simple: customizing how Heroku builds our Django app.

Heroku's Python buildpack offers two hooks, `pre_compile` and `post_compile`. To use them, we just need to add `bin/pre_compile` or `bin/post_compile` to our app's repository. For compiling SCSS, we want `post_compile`, as it only runs after all pip packages are installed.

Create a file at `bin/post_compile` in the root of your repository with the following contents:

```bash
#!/usr/bin/env bash

# Heroku passes the build directory as the first argument
cd "$1" || exit 1

echo "-----> Compiling SCSS"
python manage.py compilescss --traceback

echo "-----> Collecting static files"
python manage.py collectstatic --noinput --traceback
```

The first command, `cd "$1"`, changes the current directory to the build directory passed in by Heroku. Then we just run `compilescss` and `collectstatic` as needed.

If you commit this file and deploy to Heroku, you should already see the SCSS compilation working. The only remaining step is a small optimization: we want to stop Heroku from running `collectstatic` itself, as we're already running it ourselves. Simply run:

```bash
heroku config:set DISABLE_COLLECTSTATIC=1 --app <YOUR_APP_NAME>
```

That's it! Everything should be running fine, and if you need to add other build steps (e.g. minifying, compressing images, etc.), just add them to the `bin/post_compile` file.

You can see how I've done it in my Django project at https://github.com/vitorbaptista/lainonima.

Code analysis: How the Diario Oficial project extracts data from gazettes' PDFs

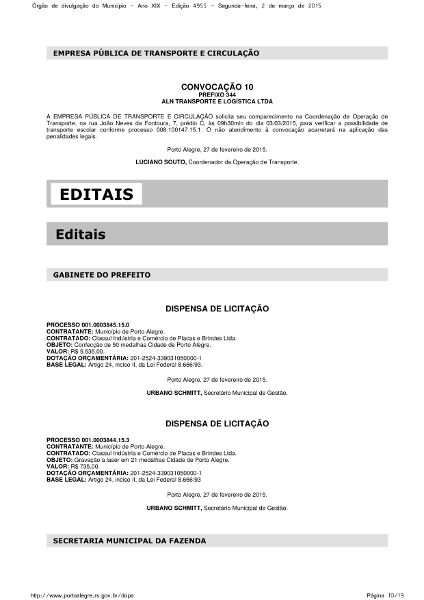

July 7, 2018

The Diário Oficial is a project by the Serenata de Amor Operation to extract, from the official gazettes, the government purchases that were exempt from bidding (because they are below a certain value). The intent is to get this data in a machine-readable format, so we can look for suspicious purchases. In this post, we'll walk through the code to understand how a gazette is parsed and how its bidding exemptions are extracted.

The code is available on GitHub. The application is split into two main areas: web and processing. The web component, written in NodeJS, is responsible for the API and the website that visualizes the data. The processing component deals with scraping the gazettes, extracting their data, and saving it to the database. We'll focus on the processing component.

The processing component uses Celery to run its tasks periodically. The tasks are defined in `diario-oficial/processing/tasks.py`, which defines two periodic tasks: `parse_sections` and `run_spiders`. The `run_spiders` task runs every day at 13:00 UTC, finding the gazette's PDF and saving its text into the database (using `PdfParsingPipeline`). The `parse_sections` task then goes over these records, extracting the data from their text.

In this post, we'll look into the `parse_sections` task, walking through the process of extracting the data of a gazette from Porto Alegre.

The initial state

We're looking into how the `parse_sections` task behaves when a gazette PDF's text is already in the database (extracted by the `run_spiders` task). We'll use gazette 4.955 from Porto Alegre, published on March 2, 2015, and extract its bidding exemptions. This is what they look like in the source PDF:

We also have it in textual form extracted from this PDF. In the end, the data in each bidding exemption (i.e. “dispensa de licitação”) section will be parsed and saved into our database.

Step 1: Extract the sections with bidding exemptions

The parse_sections task is defined as:

```python
@app.task
def parse_sections():
    # Instantiate object that will update the Gazette model
    row_update = RowUpdate(Gazette)
    # Run the parsing using SectionParsing and update the model
    row_update(SectionParsing)
    # Schedule parsing of the bidding exemptions' text
    parse_bidding_exemptions.delay()
```

The `RowUpdate` class abstracts updating rows in the database. It's instantiated with a model (`Gazette` in this case), and then called with an "executor" class, `SectionParsing`. Let's take a look:

```python
class SectionParsing:
    def __init__(self, session):
        self.session = session

    def condition(self):
        return 'is_parsed = FALSE'

    def update(self, gazettes):
        for gazette in gazettes:
            territory = PARSABLE_TERRITORIES.get(gazette.territory_id)
            if territory:
                parsing_cls = getattr(locations, territory)
                parser = parsing_cls(gazette.source_text)
                self.update_bidding_exemptions(gazette, parser)
                gazette.is_parsed = True

    def update_bidding_exemptions(self, gazette, parser):
        parsed_exemptions = parser.bidding_exemptions()
        if parsed_exemptions:
            # Replace previously saved exemptions with the freshly parsed ones
            for record in gazette.bidding_exemptions:
                self.session.delete(record)
            for attributes in parsed_exemptions:
                record = BiddingExemption(**attributes)
                record.date = gazette.date
                gazette.bidding_exemptions.append(record)
```

This class filters the gazettes to be updated, replaces their existing bidding exemptions (if any) with the ones just parsed, and then marks each gazette by setting `is_parsed = True`, so it won't be parsed again.

The only gazettes that will be parsed are the ones that:

- Weren't parsed yet (i.e. `is_parsed == False`); and
- Belong to a territory we have a parser for (i.e. their `territory_id` is in the `PARSABLE_TERRITORIES` dictionary).
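The post doesn't show `RowUpdate` itself. To make the executor pattern concrete, here is a minimal, hypothetical stand-in that works on in-memory objects instead of a database session: it keeps the rows whose `is_parsed` flag is false (a Python stand-in for the SQL `condition()`), hands them to the executor's `update()`, and leaves the rest untouched. The names `InMemoryRowUpdate`, `Row`, `FakeSectionParsing` and the territory ids are illustrative assumptions, not the project's code.

```python
from dataclasses import dataclass

# Hypothetical mapping from territory_id to parser class name
PARSABLE_TERRITORIES = {"porto_alegre": "RsPortoAlegre"}


@dataclass
class Row:
    """Stand-in for a Gazette row."""
    territory_id: str
    is_parsed: bool = False


class InMemoryRowUpdate:
    """Hypothetical RowUpdate-like helper working on a list instead of a table."""

    def __init__(self, rows):
        self.rows = rows

    def __call__(self, executor_cls):
        executor = executor_cls(session=None)  # no database session here
        # Python equivalent of the executor's "is_parsed = FALSE" condition
        pending = [row for row in self.rows if not row.is_parsed]
        executor.update(pending)
        return pending


class FakeSectionParsing:
    """Marks a row as parsed only if a parser exists for its territory."""

    def __init__(self, session):
        self.session = session

    def update(self, rows):
        for row in rows:
            if PARSABLE_TERRITORIES.get(row.territory_id):
                # The real class would run the territory's parser here
                row.is_parsed = True


rows = [Row("porto_alegre"), Row("unknown"), Row("porto_alegre", is_parsed=True)]
InMemoryRowUpdate(rows)(FakeSectionParsing)
print([row.is_parsed for row in rows])  # [True, False, True]
```

Rows from territories without a parser stay unparsed, so they would be picked up again once a parser for them is added.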

The parsers themselves are defined in diario-oficial/processing/gazette/locations. Let’s check the RsPortoAlegre parser:

```python
class RsPortoAlegre(BaseParser):
    def bidding_exemptions(self):
        items = []
        for section in self.bidding_exemption_sections():
            items.append(
                {'data': self.bidding_exemption(section), 'source_text': section}
            )
        return items

    # other methods omitted for brevity...
```

This class goes over the PDF's text looking for the bidding exemption sections, and returns a list in which each item looks like:

```python
{
    'data': {
        'CONTRATANTE': 'Município de Porto Alegre.',
        'CONTRATADO': 'Classul Indústria e Comércio de Placas e Brindes Ltda.',
        'OBJETO': 'Confecção de 50 medalhas Cidade de Porto Alegre.',
        'VALOR': 'R$ 5.535,00.',
        'DOTAÇÃO ORÇAMENTÁRIA': '201-2524-339031050000-1',
        'BASE LEGAL': 'Artigo 24, inciso II, da Lei Federal 8.666/93.',
    },
    'source_text': '...'  # The original text
}
```

Each of these items becomes a `BiddingExemption` record appended to `gazette.bidding_exemptions`.
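The post omits how `RsPortoAlegre` turns a section's text into that `data` dictionary. Assuming each field appears in the text as an upper-case label followed by a colon (e.g. `VALOR: R$ 5.535,00.`), which is an assumption about the gazette's layout and not something the post shows, a regex sketch could look like this. The label list comes from the example above; `bidding_exemption` here is a hypothetical standalone function, not the project's method.

```python
import re

LABELS = [
    "CONTRATANTE",
    "CONTRATADO",
    "OBJETO",
    "VALOR",
    "DOTAÇÃO ORÇAMENTÁRIA",
    "BASE LEGAL",
]
ALTERNATIVES = "|".join(re.escape(label) for label in LABELS)

# "LABEL:" followed by everything up to the next known label or the end
FIELD = re.compile(
    r"(?P<label>{0}):\s*(?P<value>.*?)(?=\s*(?:{0}):|\Z)".format(ALTERNATIVES),
    re.DOTALL,
)


def bidding_exemption(section):
    """Hypothetical parser: map each label found in `section` to its text."""
    return {
        match.group("label"): " ".join(match.group("value").split())
        for match in FIELD.finditer(section)
    }


section = (
    "CONTRATANTE: Município de Porto Alegre.\n"
    "CONTRATADO: Classul Indústria e Comércio de Placas e Brindes Ltda.\n"
    "OBJETO: Confecção de 50 medalhas Cidade de Porto Alegre.\n"
    "VALOR: R$ 5.535,00.\n"
    "DOTAÇÃO ORÇAMENTÁRIA: 201-2524-339031050000-1\n"
    "BASE LEGAL: Artigo 24, inciso II, da Lei Federal 8.666/93."
)
print(bidding_exemption(section)["VALOR"])  # R$ 5.535,00.
```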

Step 2: Parse the bidding exemptions

Notice that all attributes in the bidding exemptions' data are strings. For example, the "VALOR" (value) attribute is "R$ 5.535,00." instead of the number 5535. In this step, we'll clean and parse these values into their specific data types via the `parse_bidding_exemptions` task.

```python
@app.task
def parse_bidding_exemptions():
    row_update = RowUpdate(BiddingExemption)
    row_update(BiddingExemptionParsing)
```

Here we have `RowUpdate` again, as in the `parse_sections` task, but this time we're updating the `BiddingExemption` model using the `BiddingExemptionParsing` class. Let's see what it looks like:

```python
class BiddingExemptionParsing:
    def condition(self):
        return 'is_parsed = FALSE'

    def update(self, records):
        for record in records:
            territory = PARSABLE_TERRITORIES.get(record.gazette.territory_id)
            if territory:
                self.update_object(record)
                self.update_value(record)
                self.update_contracted(record)
                self.update_contracted_code(record)
                record.is_parsed = True

    # other methods omitted for brevity...
```

It is similar to the `SectionParsing` class we saw in the last step. It uses the same `is_parsed = FALSE` condition, but instead of getting the gazettes, it gets their bidding exemptions. It loops over each exemption, and if it's from a territory we have a parser for (e.g. Porto Alegre), it parses its data.

The parsing is straightforward. For example, the `update_value()` method simply turns values like "R$ 5.312,94" into the number 5312.94.
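The post doesn't show `update_value()`. A minimal sketch of the conversion it describes, assuming Brazilian number formatting ("." for thousands, "," for decimals) plus an optional "R$" prefix and trailing period, could look like this. The function name `parse_brl` is hypothetical, and returning a `Decimal` rather than a float (to avoid rounding issues with currency) is my choice, not necessarily the project's.

```python
from decimal import Decimal, InvalidOperation


def parse_brl(text):
    """Convert strings like 'R$ 5.312,94' into Decimal('5312.94').

    Returns None when the text doesn't look like a BRL amount.
    """
    cleaned = text.replace("R$", "").strip().rstrip(".")
    # Brazilian format: "." groups thousands, "," separates decimals
    cleaned = cleaned.replace(".", "").replace(",", ".")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


print(parse_brl("R$ 5.312,94"))  # 5312.94
```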

Notice that, unlike the SectionParsing class, the parsing is implemented directly in BiddingExemptionParsing. This means that the same code is used by all territories (currently only Goiânia and Porto Alegre). This code will probably need to change in the future, as more and more territories are added, each with their own differences. In the meantime, it’s a good example of not adding complexity before you actually need to.

After this code finishes, the database will contain the data properly cleaned and parsed in their respective data types (e.g. numbers instead of strings), which can then be displayed via the web interface and API.

How to transcribe a video using YouTube

February 26, 2018

Transcribing a video is a tedious and time-consuming task. It used to be impossible for machines to do, but this isn't the case anymore: just ask Siri, Alexa, Google Home, or any of the many other voice assistants. The question becomes: how can a regular user apply this technology to their own videos?

YouTube automatically generates subtitles for videos. What if we could use it to transcribe our own videos? Turns out we can, and it’s pretty easy (if you know how to use the command-line).

Pre-requisites

- Basic knowledge of the command-line

- youtube-dl

- Your video uploaded to YouTube (you can set it as Unlisted if you don’t want others to find it)

Instructions

We will use The Guardian's "An extraordinary year: 2017 in review" video as an example, but this should work with any video. You can check the quality of YouTube's automatic subtitle generation by enabling the video's subtitles. To do so, click on the gear symbol, then Subtitles/CC, and select English (auto-generated). If you don't see this option, YouTube may not have generated the subtitles yet, so wait a bit and try again.

Step 1. Download the auto-generated subtitles

Our first step is to download these subtitles. We will use youtube-dl for it. Open your terminal, and write:

```bash
youtube-dl --skip-download --write-auto-sub --sub-lang en https://www.youtube.com/watch?v=027ikJwr6fQ
```

The options we’re using are:

- `--skip-download`: Don't download the actual video, just the subtitles
- `--write-auto-sub`: Write the automatically generated subtitles
- `--sub-lang en`: Select the English subtitles

To see all available languages, run `youtube-dl --list-subs https://www.youtube.com/watch?v=027ikJwr6fQ`.

This command downloads the subtitles into the current directory, as a file ending in `.vtt`. In my case, it's named `An extraordinary year - 2017 in review-027ikJwr6fQ.en.vtt`. If you open it, you should see something like:

```
WEBVTT
Kind: captions
Language: en
Style:
::cue(c.colorCCCCCC) { color: rgb(204,204,204);
}
::cue(c.colorE5E5E5) { color: rgb(229,229,229);
}
##

00:00:00.730 --> 00:00:04.950 align:start position:19%
[Music]

00:00:02.240 --> 00:00:08.690 align:start position:19%
after<c.colorE5E5E5><00:00:03.240><c> Trump's</c><00:00:03.570><c> election</c></c><c.colorCCCCCC><00:00:04.049><c> America</c><00:00:04.799><c> is</c></c>

00:00:04.950 --> 00:00:08.690 align:start position:19%
bracing<00:00:05.549><c> itself</c><00:00:05.700><c> for</c><00:00:06.029><c> conflict</c>

00:00:11.220 --> 00:00:15.840 align:start position:19%
```

Step 2. Convert the subtitle to a text file

The subtitle file we downloaded contains everything we need, but it's very hard to read, as it also contains timing and styling information. There are many online tools that convert VTT files to TXT, leaving us with only the text. Just search for "convert vtt to txt" and you should find an option. The one I found was Subtitle Tools, but any of them should work.

Once you find the tool, upload the VTT file we downloaded in the last step, and download the resulting TXT file. This is the result:

```
[Music]
after Trump's election America is
bracing itself for conflict
I knew for a few families again I know
some that gray and there are no salmon
identify the distance between war and
civilian life a brand new investigation
into the tax lives of the rich and
famous
Africans have benefited from their
```

This is impressively good, getting almost everything right.
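If you'd rather not upload the file to an online tool, the conversion is simple enough to do locally. Below is a minimal Python sketch (not what Subtitle Tools does internally, which I don't know). It drops everything before the first cue timing line (the `WEBVTT`, `Kind`, `Style` and `##` header), skips the timing lines themselves, strips inline tags like `<c.colorE5E5E5>` and `<00:00:03.240>`, and drops a line when it's identical to the previous one, since auto-generated captions can repeat a line in the following cue. It assumes, as in the file above, that cues have no numeric identifiers.

```python
import html
import re
import sys

# Cue timing lines, e.g. "00:00:02.240 --> 00:00:08.690 align:start position:19%"
TIMING = re.compile(r"^(?:\d{2}:)?\d{2}:\d{2}\.\d{3} --> ")
# Inline tags such as <c.colorE5E5E5>, </c> and <00:00:03.240>
TAG = re.compile(r"<[^>]+>")


def vtt_to_text(vtt):
    """Strip the header, cue timings and inline tags from a WebVTT string."""
    lines = []
    in_header = True
    for raw in vtt.splitlines():
        line = raw.strip()
        if TIMING.match(line):
            in_header = False  # the first cue ends the header block
            continue
        if in_header or not line:
            continue
        text = html.unescape(TAG.sub("", line)).strip()
        # Skip a line that merely repeats the previous one
        if text and (not lines or lines[-1] != text):
            lines.append(text)
    return "\n".join(lines)


if __name__ == "__main__":
    with open(sys.argv[1], encoding="utf-8") as f:
        print(vtt_to_text(f.read()))
```

Saved as, say, `vtt_to_text.py` (a name I made up), it can be run as `python vtt_to_text.py "An extraordinary year - 2017 in review-027ikJwr6fQ.en.vtt" > transcript.txt`.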

Conclusion

Although the transcription quality probably isn’t as good as what a human transcriber would do, it can serve as a starting point. Instead of starting from scratch, you can review and fix what YouTube generated, hopefully saving you (a lot of) time. As YouTube improves, this method will generate better results.

If your results weren't good, you might try improving your audio's quality and uploading again. There is a limit to what it can do, but if your audio is clear and its language is well supported, you should be able to get decent results.

How to access the host's Docker Socket without root

January 13, 2017

I needed to run a Docker container from inside another container. While it's possible to run Docker inside Docker, the recommended way is to run sibling containers. The challenge then is how to create a Docker container on the host machine from inside another Docker container.

It's easy in theory. You just have to map the host's `/var/run/docker.sock` to the container's, like:

```bash
docker run -v /var/run/docker.sock:/var/run/docker.sock IMAGE
```

If the Docker binary is installed in that container, any command like `docker ps` will actually return the containers running on the host. The problem is that only the container's root user is able to run those commands. This is because `/var/run/docker.sock` is only readable and writable by root and the `docker` group (if you haven't created this group, check this tutorial). To run as a regular user, we need to add our user inside the container to the host's `docker` group. This is where things start getting messy. Let's look at the permissions of `/var/run/docker.sock` from the host machine and from inside the container:

```
$ ls -lah /var/run/docker.sock
srw-rw---- 1 root docker 0 Jan 13 16:44 /var/run/docker.sock
$ docker run -v /var/run/docker.sock:/var/run/docker.sock ubuntu ls -lah /var/run/docker.sock
srw-rw---- 1 root 999 0 Jan 13 19:44 /var/run/docker.sock
```

Notice that the group is named `docker` on the host, but shows up as `999` in the container. This happens because the group exists only on the host: the container only sees that the file is owned by the group with GID (group ID) 999, but doesn't know its name. To be able to access this file, we need to:

- Create a group inside the container with the same GID as in the host;

- Add our non-root user to this group;

- Run the rest of the commands as the non-root user.

This can't be done at image build time, because the GID depends on where the container is running. It's 999 on my machine, but it can be another number on yours. We need to do it when the container starts, with a script like:

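```bash
#!/usr/bin/env bash
# Based on https://github.com/jenkinsci/docker/issues/196#issuecomment-179486312
# This only works if the docker group does not already exist

DOCKER_SOCKET=/var/run/docker.sock
DOCKER_GROUP=docker
REGULAR_USER=ubuntu

if [ -S "${DOCKER_SOCKET}" ]; then
    # GID that owns the socket on the host (999 in the example above)
    DOCKER_GID=$(stat -c '%g' "${DOCKER_SOCKET}")
    # Create the group with that GID (-f force, -o allow a non-unique GID, -r system group)
    groupadd -for -g "${DOCKER_GID}" "${DOCKER_GROUP}"
    usermod -aG "${DOCKER_GROUP}" "${REGULAR_USER}"
fi

# Change to regular user and run the rest of the entry point.
# $* joins all arguments into the single -c command string, so arguments
# containing spaces are not preserved as-is.
su "${REGULAR_USER}" -c "/usr/bin/env bash runner.sh $*"
```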

This creates a `docker` group with the same GID as the one owning `/var/run/docker.sock`, and adds `REGULAR_USER` to it. The last step is to switch to the non-root user and continue with whatever process you were running. In this example, I'm running `runner.sh`, passing it whatever arguments we received. It'll be able to run any Docker command as if it were on the host.
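When debugging this setup from inside the container, the same information can be checked with Python's standard library. This is a small diagnostic sketch (the function name is mine, and it only works on Unix-like systems because of the `grp` module): `os.stat().st_gid` is the number `stat -c '%g'` prints, and `grp.getgrgid()` raises `KeyError` when the container has no group with that GID, which is exactly the `999` situation above.

```python
import grp
import os


def socket_group_status(path="/var/run/docker.sock"):
    """Return (gid, group name or None, whether this process is in that group)."""
    gid = os.stat(path).st_gid  # same number `stat -c '%g'` prints
    try:
        name = grp.getgrgid(gid).gr_name
    except KeyError:
        name = None  # no group with this GID exists in the container
    in_group = gid == os.getegid() or gid in os.getgroups()
    return gid, name, in_group


if __name__ == "__main__":
    print(socket_group_status())
```

Running it as the regular user after the entry point script should report a group name of `docker` and `True` for the last value.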

Be aware that there are security implications in allowing containers to access `/var/run/docker.sock`: anything that can talk to it can control the host's Docker daemon. Make sure you trust the containers' code.

Where do the 2015 Knight News Challenge applicants come from?

October 2, 2015

The Knight News Challenge is a grant by the Knight Foundation for "breakthrough ideas in news and information". Between September 8th and 30th of this year, 1,023 entries were submitted by 960 teams or individuals (myself included).

I wrote a scraper that gathers the data from these submissions. Its code and the resulting dataset are available on GitHub. My first question was where the applicants come from. The submission form has a "Location" field, but it's not clear whether it refers to where the team lives or where the project is going to be applied. It is also a simple textarea, so the data is messy.

To answer this question, I had to go through every entry and figure out what's the actual location. I used the team's location, and not where the project will be applied. For example, if a team from the USA submits a proposal for a project about Kenya, I still considered their country as the USA. If the team is distributed, a single proposal can have multiple countries.

I tried to be careful when cleaning the data, but there might have been some mistakes, so take these results with a grain of salt. I also couldn't determine the location of 7 projects (around 0.7% of the total).

First, let's see a choropleth map:

The 7 colors are divided by quantiles. As the data is heavily skewed, the values and the colors don't have a linear relation. Only the USA, with 687 projects, is in the darkest band. The next band has 4 countries: UK (51), Germany (30), Brazil (29) and Canada (25). The top 11 countries are:

| Country | Count | % |
|---|---|---|
| USA | 687 | 58.17% |
| UK | 51 | 4.32% |
| Germany | 30 | 2.54% |
| Brazil | 29 | 2.45% |
| Canada | 25 | 2.12% |
| Mexico | 17 | 1.44% |
| Kenya | 16 | 1.34% |
| India | 16 | 1.34% |
| Argentina | 15 | 1.27% |
| Spain | 11 | 0.93% |
| Chile | 11 | 0.93% |
| Others | 284 | 25.08% |
| Total | 1181 | 100% |

There are 98 countries in total.

Remember that these numbers represent the number of projects that have at least one team member based in that country, so the total here can differ from the total number of entries (here it is 1181 vs. 1023).
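The total exceeds the number of entries because a project is counted once for every distinct country among its team members. A minimal sketch of that counting rule with Python's `collections.Counter` follows; the input format (one list of countries per entry) and the toy data are assumptions for illustration, not the actual dataset. The % column in the table appears to be each count divided by the 1181 total (e.g. 687 / 1181 ≈ 58.17%), which the second helper mirrors.

```python
from collections import Counter


def projects_per_country(entries):
    """Count, for each country, the entries with at least one team member there.

    `entries` holds one list of countries per entry. Duplicates inside an
    entry are counted once, and an empty list (unknown location) adds nothing.
    """
    counts = Counter()
    for countries in entries:
        counts.update(set(countries))
    return counts


def share_percentages(counts):
    """Each country's count as a percentage of the sum of all counts."""
    total = sum(counts.values())
    return {country: round(100 * n / total, 2) for country, n in counts.items()}


# Toy data, not the real dataset: 4 entries, but 5 country counts
entries = [["USA"], ["USA", "Kenya"], ["UK", "UK", "Germany"], []]
counts = projects_per_country(entries)
print(counts.most_common())
print(share_percentages(counts))
```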

I don't know what the countries' distribution was in the previous News Challenges, so I don't have a baseline to compare against. Still, it's interesting to see that, even though more than half of the entries had someone based in the USA on the team, almost every continent is represented in the top 11 countries. The only exception is Oceania, which comes close, with 10 projects having someone based in Australia.

The data used here is available on GitHub here (unmodified, straight from the News Challenge page), and here (teams' countries added).